a Homeric odyssey

Like everyone else of a certain sort (online and writerly), I’ve been playing with ChatGPT.

It’s a “large language model”, trained on a monstrous corpus of text, that basically projects (based on stuff people have said before) what might come next given a sequence of text. It doesn’t really “understand” it in a way that we understand “understanding”, but it’s pretty good at this point in saying stuff that sounds plausible and is sometimes useful.

As a writer, I’m fascinated by the mystery of “what comes next” out of my own writer’s brain.

Me:

What is the connection between Homer’s Odyssey and Homer Simpson?

ChatGPT:

Homer Simpson, the main character in the popular American animated sitcom “The Simpsons,” is named after the ancient Greek poet Homer, who wrote the epic poems “Iliad” and “Odyssey.” The name “Homer” is a reference to the epic poet, and the character’s traits and experiences often reflect elements from the stories in the “Odyssey.”

That’s pretty good stuff! I have no idea if it’s true or not – sounds like bullshit to me. One of the things we’ve learned in playing with ChatGPT is that large language models are superb bullshitters. I suppose I could Google it.

The fun thing for me as a writer is the “Holy moly, where did that come from?” feeling I sometimes get when I’m sitting at a keyboard and my fingers are flying.

When the work is flowing (which is often; I’m not one of those suffering “Woe, writing is hard!” writers), my “writer’s brain” is like a playful, faithful golden retriever finding great sticks for me. I smile and pat it on the head and type up the stick, not much caring how the dog found it or where the next one is coming from.

Sometimes the sticks are weird and misshapen, but I don’t care because my dog brought them to me and they are therefore lovely by default!

Wikipedia and the large language model golden retriever that is my writer’s brain

The weird thing about this is that the “my brain/golden retriever” distinction really is sometimes how it feels. Like, “Where did that come from?” It’s not some muse or spiritual force. It’s like a pet dog! Which is why playing with large language models is so intriguing. I don’t usually have access to someone else’s golden retriever.

I have no idea if this is right, but as I’m in the business of trafficking in metaphor, I’ll cling to it for at least a moment. Let’s go through an example.

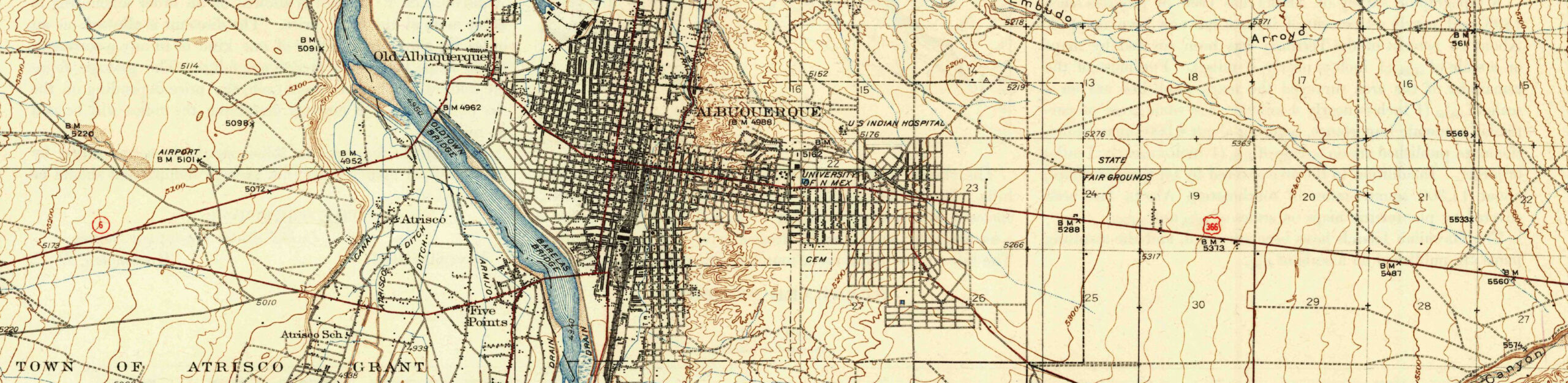

I’d planned to spend the morning working on my new Rio Grande book, but my golden retriever writer’s brain was feeling playful, and I agreed to take it for a walk.

I wanted to do a quick primer for readers about what’s up on the River. The premise was that there’s a bunch of stuff going on simultaneously, which makes solving the Colorado River’s problem a capacity challenge, and the first stick the dog brought me was the “walk and chew gum at the same time” cliche. That’s a nearby stick; I probably could have found it by myself. But I wanted another stick – I love to take a cliche and twist it in a way that makes you notice it. The golden retriever, without even stopping for a pat on the head and a “good dog,” zoomed out and returned with Homer’s Odyssey – a long story, recited in an oral tradition, which is hard to do while you’re chewing gum.

Good dog!

This is the fun part. I have no idea where stuff like that comes from! I just smile and type. But the large language model probability thing gives me a nice mental model for what my writer’s brain is up to, sorting through its neural network for “the sort of thing that might come next.”

When my dog brings me a stick, I like to check it out, and my understanding of stuff like ancient Greece, like much of the material I pretend to know, is modest. So I went to the Google. Which gave me, right out of the box, the “Homer’s Odyssey” episode of The Simpsons! (Google’s a good dog – not up to the standards of my golden retriever, you really have to tell it where to look for sticks, but a good dog.)

The “truth” part is the sort of thing ChatGPT isn’t very good at yet – excellent at stringing together words that might reasonably follow, that sound plausible, but not so good at knowing if the words are right. The old journalist’s cliche, “If your mother tells you she loves you, check it out,” applies to ChatGPT and also to my dog’s sticks. The stodgy, plodding Google search is great to have at moments like this.

The meat of the post was straightforward – short term EIS, longer term EIS, etc. – but the real fun was yet to come.

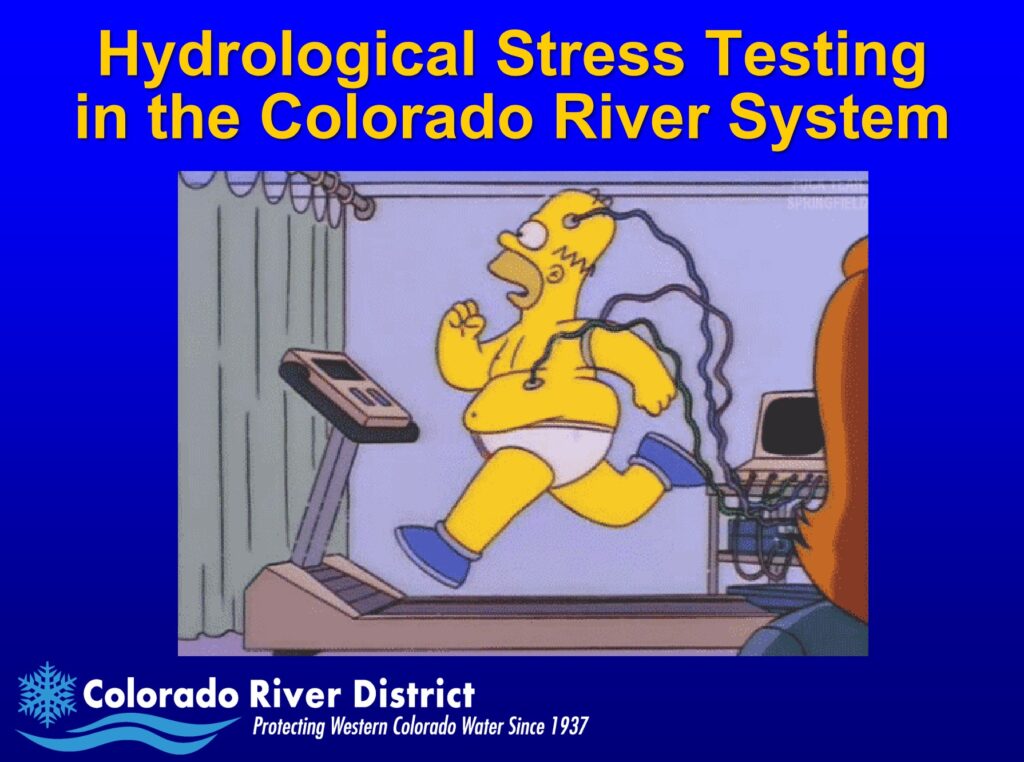

In search of a Homer Simpson kicker, my golden retriever writer brain somehow rummaged up the old 2013 Eric Kuhn/Dave Kanzer* Homer Simpson Colorado River Stress Test CRWUA slide! Again, I’m in admiration of my dog’s fetching skills, like where the hell did you find that?

* I tried calling Eric to confirm, couldn’t reach him, but one of the sticks my retriever fetched was about Dave having something to do with that slide. (Some stuff I can’t Google and have to call Eric.)

** Eric called back. DK was, in fact, instrumental in the Homer Simpson Stress Test Slide, one of the great contributions to the 21st century Colorado River canon.