Jeff Jarvis, who has (for better or worse) become something of a new media guru stalking the land of the old, caused a bit of a stir in my world when he posted last Saturday on the amazing success of the LA Times news web operation:

David Westphal reports an important and historic crossing of the Rubicon for a major newspaper, recounting a discussion with LA Times editor Russ Stanton at USC: “Stanton said the Times’ Web site revenue now exceeds its editorial payroll costs.”

His response?

So why not go ahead and turn off the presses and the trucks and turn the Times into a pure news enterprise, disaggregated from its production and distribution businesses?

That’s frankly breathtaking in its ignorance of the customers the Times is trying to serve. A million people a day (a million!) still fork over money to buy the print product. Those people are LA Times customers.

There are problems with the print product business model, to be sure. But for a supposedly smart business guru to suggest that a company abandon that many paying customers – as opposed to, say, figuring out how to continue selling them a product they seem to want? In what universe does that make any sense at all?

There are important lessons to learn from the LA Times online success, but abandoning print is not one of them.

I think the argument is that if your execs have only eight hours a day to address two markets (one very profitable but dwindling, the other growing but not very profitable), you’d ideally want them to spend all their time, bandwidth, etc., on the second one (because that is where the business must be in five years), even though it is completely understandable that they’ll focus on the first (since that is where their expertise and revenues are). This is really the heart of the ‘dilemma’ in Christensen’s innovator’s dilemma. It is completely reasonable (if you’re the LAT) to focus on the existing subscriber base, but while you do that, someone else (let’s call him ‘Craig’) will be focusing on the growing market, and therefore when the LAT gets around to that market, Craig will be the expert and the LAT will be a relatively inexperienced newbie. Not surprisingly, the LAT will then lose.

I’m fond of newspapers, but this is a *very* tough problem, and it’s going to get worse before it gets better, I’m sorry to say.

I used to use Christensen often to help people do strategy in computer systems, where these effects have generally occurred much faster. There are many lessons for newspapers, but unfortunately, many are not very pleasant.

1) Once upon a time, mainframes [1950s onward] and minicomputers [1960s onward] were bundled systems, with each vendor providing *everything*:

– proprietary hardware

– proprietary software

– service

– technical support, including serious domain-expertise specialists

In the US alone, there were a handful of mainframe companies, i.e., IBM and the “seven dwarves”. By the 1970s, there were dozens of US minicomputer companies, including larger ones like Digital Equipment Corporation, Data General, Wang, Prime, Hewlett Packard, SDS, Tandem and divisions of IBM, Honeywell, etc.

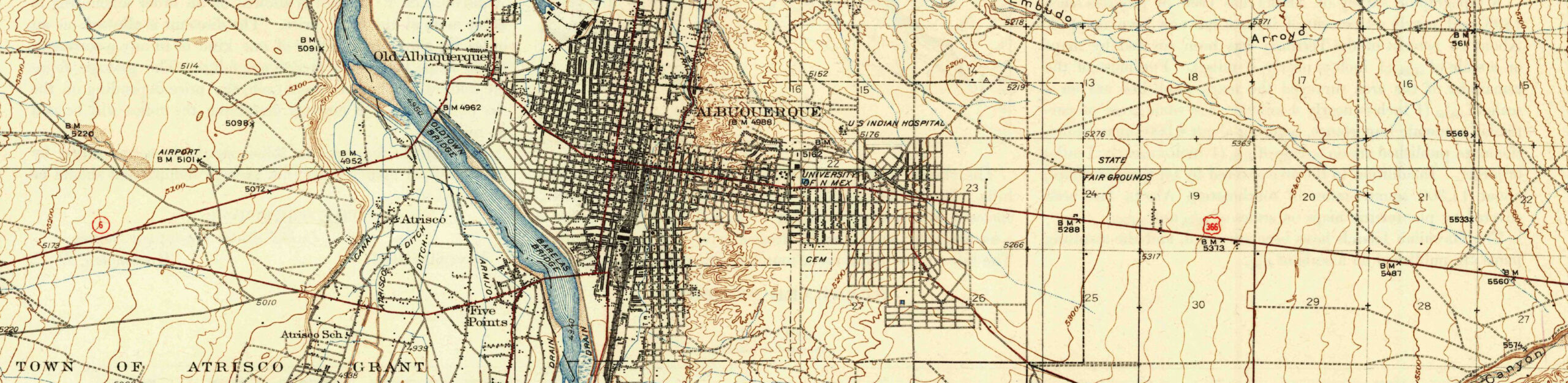

Each was its own universe, because it was difficult to switch [just as an Albuquerque newspaper is not so interesting to Los Angeles residents, and vice versa.]

Each company had its own “installed base”, and of course those customers wanted “better, faster, cheaper” and 100% upward-compatible.

2) IBM became spectacularly successful by betting the company in the early 1960s, eliminating a half dozen (incompatible) product lines in favor of one, the IBM S/360. That was a wrenching transition for some, but one that ended up creating a volume market for 3rd-party software, which really hadn’t existed before, given the fragmentation. [Of course, the now-popular “open source” idea, in which people share software, already existed in the 1950s, maybe even in the late 1940s, although in forms different from today’s. In some cases, this created very high-quality software *for free*, with which vendors had to compete, sometimes awkwardly.]

3) In the 1970s/1980s, successful computer systems companies sold high-margin products, and those margins supported a lot of dedicated engineering & support resources.

4) In the 1970s, no microprocessor was performance-competitive with the better minicomputers. By the early 1980s, micros had gotten “good enough” to be interesting (classic Christensen), allowing the rise of workstations & servers (Apollo, Sun, SGI, HP and others) whose price-performance was very good. Worse, by the late 1980s, the better microprocessors outperformed minicomputers.

Hence, the skill-set of designing multiple electronic logic boards to build a proprietary CPU came under pressure from the much simpler approach of buying a good micro, adding memory and (later) a few standardized chips, like Ethernet, and having a computer.

Needless to say, many skilled hardware engineers were not too keen on seeing their expertise apparently made obsolete, and some could come up with numerous reasons why they should not switch approaches.

Of course, this lowered the barrier-to-entry for hardware design.

5) At the same time, the UNIX operating system had emerged from Bell Labs and universities (especially Berkeley), and it enabled companies to do a quick UNIX port and immediately gain access to masses of third-party software, rather than expensively building an OS from scratch and then begging third-party software vendors to move their software to “something completely different”. Hence, another major “barrier-to-entry” disappeared, and of course, this trend accelerated with the rise of the IBM PC and Microsoft.

6) Result: most minicomputer companies disappeared, by acquisition, by shifting to another business, or by going out of business. The only major exceptions would be IBM’s minicomputer business and especially Hewlett-Packard. The latter a) got onto microprocessors early, b) got onto UNIX early, and c) built hardware that could run either proprietary OSs or UNIX.

7) Then, PCs kept getting better, to the point where they’ve displaced workstations. I used to work at Silicon Graphics, which in the early 1990s had great margins and supported many engineers and wonderful technical support people. We had:

– computational chemists who’d written important programs, and were highly respected by pharmaceutical companies

– mechanical engineers who’d moved into computing, and could discuss the issues in detail with NASA or the car companies

– medical scientists who’d moved into computing, invaluable when dealing, say, with GE Medical folks doing CAT scanners, etc.

– strong algorithms people for operations research

– experts at making programs work on multiprocessor parallel computers.

That’s gone: try calling up Dell and asking to talk to their PhD biochemist who does molecular modeling…

8) The computing business is larger than ever, but it is *very* different, as it has been taken apart and reassembled:

a) People who provide serious, unique value-add, ideally delivering it cost-effectively through multiple distribution channels for as large a volume as makes sense.

b) Owners of a distribution channel that can package what their customers need. This can be large (Microsoft, which of course does a) as well) or very small (a local value-added reseller, or a local PC fixit service).

9) Any parallels?

a) The Web provides a lot of “free” stuff, akin to the (very cheap) UNIX, current open source, etc. Competing with this stuff directly is tough, when it’s good enough [it often isn’t, but it is sometimes.]

b) There are major distributors of standard stuff. Computers: Intel micros, Microsoft, Linux; in some ways, these compare to Bloomberg or AP. Searching Google News and finding N papers that carry the same AP story doesn’t make one think that a lot of value is being added.

c) Then there is unique content, which in computers still exists: support of unusual environments, or high value-add software that is difficult to duplicate, e.g., Oracle, the large numbers of industry-specific third-party software packages, or IBM mainframes, which still exist after 40+ years.

Newspapers: national/global content, like the Wall St Journal, NY Times, etc., although they are all under pressure as well.

d) Then there are actually opportunities for small firms or even individuals to take advantage of the huge volumes and low overhead to deliver content/software cheaply, assuming they can figure out how to make money.

Computers: PC software, iPhone software.

Content: blogs

e) And then there is the highly-localized content and support. Computers: someone who comes and fixes your computers to run the way you need them to.

Papers: at least around here, little local newspapers seem to be doing OK. They are very low-overhead (like PCs should be), carry very targeted local ads and news, and actually do send reporters to local meetings, but have no pretense of covering national events except via AP. We’re actually covered by 2-3 of these, which is amazing.

10) But I worry about mid-size regional newspapers, which seem to be getting squeezed, just like minicomputers got squeezed between mainframes and (especially) microprocessors + standard operating systems that reduced barriers to entry. The whole computer industry moved away from integrated, proprietary, high-margin businesses to commoditized systems, with much higher volume but very, very different value chains.

Around here, papers like the SF Chronicle and San Jose Mercury News are under pressure. Sometimes they can provide truly great content not available elsewhere, which really helps, and Fry’s Electronics must keep the SJMN in business with ads…

====

11) But you’re not alone: the shift to BEV and PHEV automobiles has much in common with the shift from proprietary minis (with each level having a lot of unique design) to micros (where large varieties of systems were built from a much smaller variety of distinct parts, combined in modular ways, along somewhat different value chains).